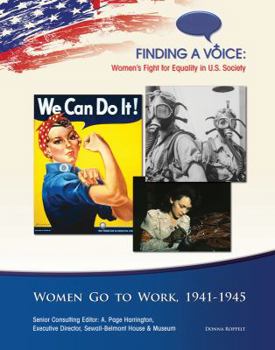

Women Go to Work: 1941-1945

(Part of the Finding a Voice: Women's Fight for Equality in U.S. Society Series)

The Second World War changed how the United States saw women's roles. Not only could women work, they could do work that men did. They could work in homes and hospitals, but they could also work in...

Format: Library Binding

Language: English

ISBN: 1422223574

ISBN13: 9781422223574

Release Date: January 2013

Publisher: Mason Crest Publishers

Length: 64 Pages

Weight: 0.70 lbs.

Dimensions: 0.3" x 7.5" x 9.4"

Age Range: 11 to 14 years

Grade Range: Grades 6 to 9
