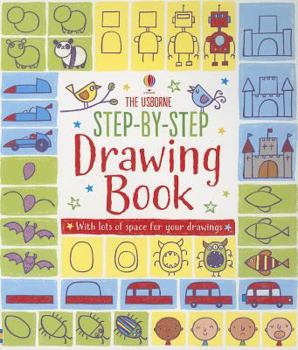

Step-by-Step Drawing Book

From rockets to robots, monkeys to monsters and lots more, find out how to draw everything you've ever wanted to by following the simple step-by-step instructions in this inspirational draw-in book. Young children will feel a real sense of achievement mastering the simple drawing activities in this book, which includes plenty of space for practising your own drawings. A fun pastime that adults are sure to enjoy, too.

This description may be from another edition of this product.

Format: Paperback

Language: English

ISBN: 0794529534

ISBN13: 9780794529536

Release Date: January 1

Publisher: Usborne Books

Length: 96 Pages

Weight: 1.12 lbs.

Dimensions: 0.5" x 8.3" x 9.7"


Customer Reviews


Rated 4 stars: Great book.

By Thriftbooks.com User

Awesome book, but a bad price for a used copy. You can get a brand-new one from Usborne Books for 9.99.
