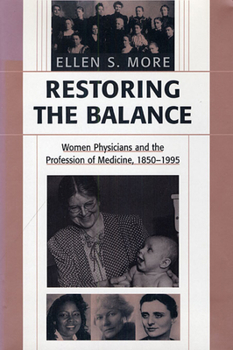

Restoring the Balance: Women Physicians and the Profession of Medicine, 1850-1995

Book Overview

From about 1850, American women physicians won gradual acceptance from male colleagues and the general public, primarily as caregivers to women and children. By 1920, they represented approximately five percent of the profession. But within a decade, their niche in American medicine--women's medical schools and medical societies, dispensaries for women and children, women's hospitals, and settlement house clinics--had declined. The steady increase...

Format: Paperback

Language: English

ISBN: 0674005678

ISBN13: 9780674005679

Release Date: March 2001

Publisher: Harvard University Press

Length: 352 Pages

Weight: 1.10 lbs.

Dimensions: 0.8" x 6.2" x 9.2"

Related Subjects

Clinical, Gay & Lesbian, Gender Studies, History, History & Philosophy, Medical, Medical Books, Medicine, Modern (16th-21st Centuries), Physician & Patient, Politics & Social Sciences, Reference, Science, Science & Math, Science & Scientists, Science & Technology, Social Science, Social Sciences, Special Topics, Textbooks, Women's Studies