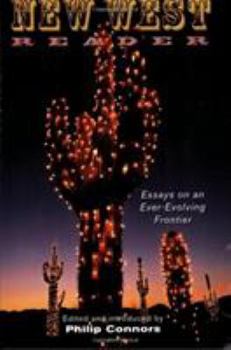

New West Reader: Essays on an Ever-Evolving Frontier

The West is vital to the myth of America. It is where radical individualism and beautiful landscapes merge in a sort of earthly paradise. Or so we've been led to believe by cinematic and literary... This description may be from another edition of this product.

Format: Paperback

Language: English

ISBN: 1560256486

ISBN13: 9781560256489

Release Date: October 2005

Publisher: Nation Books

Length: 355 Pages

Weight: 0.15 lbs.

Dimensions: 1.0" x 5.5" x 8.2"
