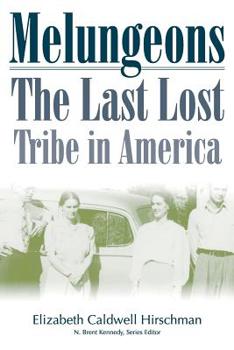

Melungeons: The Last Lost Tribe In America

(Part of the Melungeons Series)

Book Overview

Most of us probably think of America as being settled by British, Protestant colonists who fought the Indians, tamed the wilderness, and brought "democracy" (or at least a representative republic) to... This description may be from another edition of this product.

Format: Paperback

Language: English

ISBN: 0865548617

ISBN13: 9780865548619

Release Date: November 2004

Publisher: Mercer University Press

Length: 202 Pages

Weight: 0.70 lbs.

Dimensions: 0.6" x 6.2" x 8.8"

Customer Reviews

0 ratings