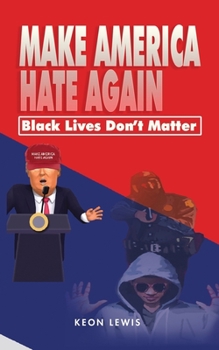

Make America Hate Again: Black Lives Don't Matter

What's the definition of "make America great again"? Is it 400 years ago, when America made the slavery of black men and women legal? Is it the '50s and '60s, when segregation took place? Is it when lynchings of African Americans were a sport? All I saw was hate for black people: white mobs killing innocent black people just because of their skin color. Fast forward, and now you have white police officers killing innocent, unarmed black men and women.

Format: Paperback

Language: English

ISBN: B08ZWFTD6B

ISBN13: 9798722118295

Release Date: March 2021

Publisher: Independently Published

Length: 158 Pages

Weight: 0.36 lbs.

Dimensions: 0.3" x 5.0" x 8.0"

Customer Reviews

0 ratings