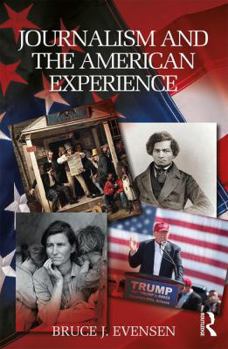

Journalism and the American Experience

Book Overview

Journalism and the American Experience offers a comprehensive examination of the critical role journalism has played in the struggle over America's democratic institutions and culture. Journalism is central to the story of the nation's founding and has continued to influence and shape debates over public policy, American exceptionalism, and the meaning and significance of the United States in world history. Placed at the intersection of American...

Format: Paperback

Language: English

ISBN: 1138044849

ISBN13: 9781138044845

Release Date: February 2018

Publisher: Routledge

Length: 404 Pages

Weight: 1.30 lbs.

Dimensions: 1.0" x 5.9" x 8.9"