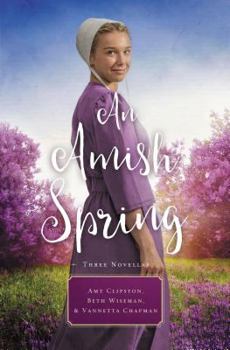

An Amish Spring

Book Overview

A Son for Always by Amy Clipston (Previously published in An Amish Cradle)
Carolyn and Joshua are thrilled to be expecting their first child together. Carolyn was a teenager when she had her son, Benjamin, and she feels solely responsible for securing his future. As Joshua watches Carolyn struggle to accept his support, he knows he must find some way to convince her that she and Ben will always be taken care of.

A Love for Irma Rose by Beth Wiseman (Previously published in An Amish Year)
The year is 1957, and young Irma Rose has a choice to make. Should she date the man who is "right" for her, or give a chance to Jonas, the wild and reckless suitor who refuses to take no for an answer? Irma Rose steps onto the path she believes God has planned for her, but when she loses her footing, she is forced to rethink her choice.

Where Healing Blooms by Vannetta Chapman (Previously published in An Amish Garden)
Widow Emma Hochstetter's quiet life is interrupted when she discovers a runaway teenager in her barn and the bishop asks her to provide a haven for a local woman and her two children. Then her mother-in-law, Mary Ann, reveals one of her garden's hidden secrets, something very unexpected.

Format: Hardcover

Language: English

ISBN: 0195181727

ISBN13: 9780195181722

Release Date: September 2011

Publisher: Academic

Length: 512 Pages

Weight: 1.86 lbs.

Dimensions: 1.8" x 6.3" x 9.3"

More by Helen Ball