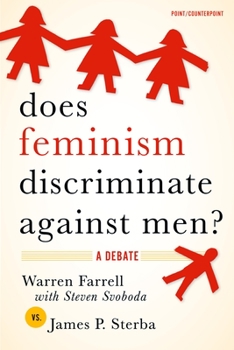

Does Feminism Discriminate Against Men?: A Debate

(Part of the Point/Counterpoint Series)

Book Overview

Does feminism give a much-needed voice to women in a patriarchal world? Or is the world not really patriarchal? Has feminism begun to level the playing field in a world in which women are more often paid less at work and abused at home? Or are women paid equally for the same work and not abused more at home? Does feminism support equality in education and in the military, or does it discriminate against men by ignoring such issues as male-only draft...

Format: Paperback

Language: English

ISBN: 019531283X

ISBN13: 9780195312836

Release Date: October 2007

Publisher: Oxford University Press

Length: 272 Pages

Weight: 0.80 lbs.

Dimensions: 0.5" x 6.0" x 8.4"